curl https://www.sqlite.org/snapshot/sqlite-snapshot-202002271621.tar.gz | tar -xzv

cd ./sqlite-snapshot-202002271621 &&

./configure --disable-fts4 --disable-fts5 --disable-json1 --disable-rtree

make && strip -d ./.libs/libsqlite3.a ./.libs/libsqlite3.so.0.8.6

ls -hs ./.libs/

1.1M libsqlite3.a

868K libsqlite3.so.0.8.6IOWOW

The persistent key/value storage engine based on Skiplist data structure.

Key features

Ultra-fast storage engine

Tiny embeddable C11 Library

Online backups support

Single data file for any number of key/value sub-databases

Motivation and history

IOWOW was originally developed for use in our embeddable JSON database engine EJDB2 Previous version of EJDB 1.x based on TokyoCabinet (TC) engine and gained some popularity. Due to LGPL licensing, technical limitations and lack of support by TC developers. We did complete redesign of engine and implemented it on top of our home grown key value database — https://iowow.io

What we are looking for?

We need a free and lightweight open sourced database engine which manages all data in a single file.

Why single data file is good for embeddable database?

It’s convenient for distribute data file as single asset between users and devices

Lesser chance to get data inconsistency on file system as result of operation on set of database files

Easier to develop data checking, migration and recovery tools

We need a database engine able to run on wide range of operating systems and hardware architectures. (x86/64, arm, mips).

Linux

macOS

FreeBSD

Windows

OpenWrt

Android

iOS

We need engine with liberal open source license. What does it mean?

Liberal license allows free use of software for practically any purpose

License may contains obligations concerning copyright notice and references to names of authors but no more

Any copyleft licenses are not liberal from our point of view. Any licenses with crazy obligations to source code (like this), licenses having restrictions on ways of source code distribution and so on are not liberal.

Requirements summary

Be open sourced and completely free for any use

Be fast and lightweight — no more than few hundreds kilobytes of linked code

Compatible with many ABIs and language bindings

Database data is located in single file

Support of data durability and fault recovering

Able to run on resources limited systems, mobile devices

Trying avoid reinvention of a wheel

SQLite?

Single file

Implemented in C language

Estimate library size overhead

Support of SQL and transactions isolation costs price. According to IOArena benchmarks simple CRUD performance may be

~5-10xslower comparing to other key value stores.

Trying avoid reinvention of a wheel

Berkeley DB?

Good performance according to our micro-benchmark

Not liberal AGPL license also licensed as commercial Oracle product

Single file

Implemented in C language

Anything else?

Good performance especially on large data sets

Not liberal GPL license also licensed as commercial Mongodb product

Server side oriented

Big binary artifact size ~3M

Good performance in benchmarks

Good OpenLDAP license

Single file

Readers and writer transactions are isolated

The database file will never shrink

You have to preallocate database to the maximum estimated data size on Windows platform

Not good performance of insert/update operations

Liberal BSD 3-Clause license

Small binary artifacts size ~400K

LevelDB successor developed by Facebook

Not liberal GPL license

Good performance especially on large data sets

Huge binary artifacts size ~20M

Works on Android

Out of review

There are number of remarkable database engines skipped in this presentation.

SophiaDB — predecessor of database backend for Mail.ru Tarantool engine

With IOArena you can compare of IOWOW against SQLite3, SophiaDB, UnQLite, RocksDB, WiredTiger, LMDB, LevelDB.

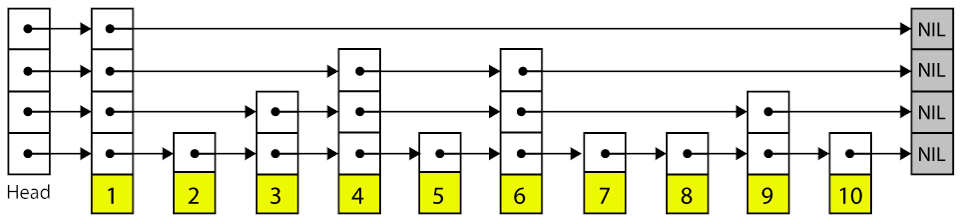

IOWOW architecture

Why we have developed IOWOW persistence layer based on Skiplist data structure?

Due to planar (non hierarchial nature) of skiplist an actual implementation may be much more simpler than B+ tree and LSM trees. Take a look on BerkeleyDB/tree/master/src/btree It ~600Kb in total which ~5x times bigger than whole IOWOW/src/kv database implementation

Skiplist is very effective for sequential scans, it is very important feature since high ratio of database queries don’t use indexes

Create a persistent skiplist implementation competitive to B+/LSM trees is very interesting and challenging task. Looking back I can say that I’m succeeded with that

Skiplist drawbacks

Not so CPU/data cache friendly comparing to B+ trees

Bigger storage overhead than B+ and LSM trees

So why the size of iowow database file limited to ~512Gb, It is the result of tradeoff between data overhead and max database size

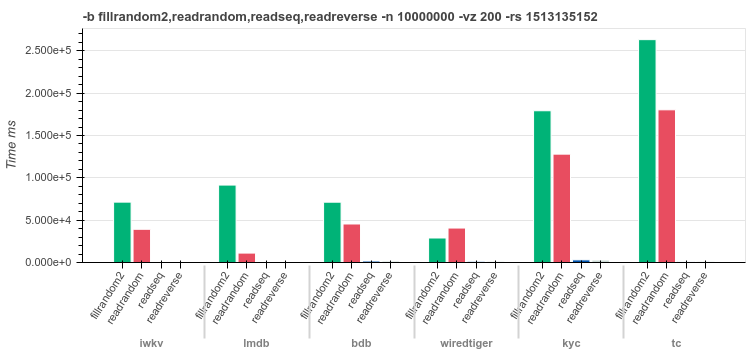

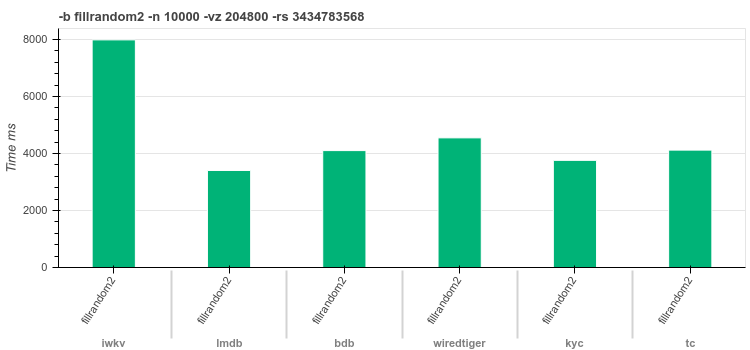

Benchmarks

It is quite hard to provide fair database benchmarks since benchmarks are highly dependent on hardware configuration, database parameters, compiler, extra libraries, build flags, workload and so on. For example one engine may be efficient on small keys while on large keys perform poorly. IOWOW benchmarked against two suites:

Next slides show single threaded micro-benchmarks

IOWOW (iwkv)

LMDB

BerkeleyDB (bdb)

KyotoCabinet (kyc)

WiredTiger

TokyoCabinet (tc)

Benchmarks

LevelDB was excluded from the latest benchmarks since it shows very poor update/insert performance comparing to competitors

Benchmarks environment

CPU 8x Intel(R) Core(TM) i7-4770 CPU @ 3.40GHz RAM 16320MB SSD Micron C400 RealSSD 256GB Filesystem ext4 OS Ubuntu 19.10

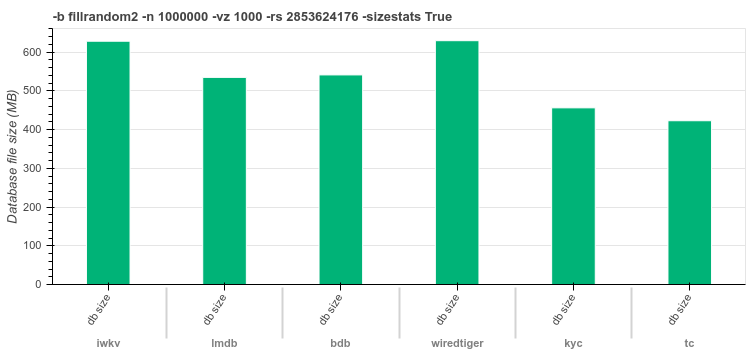

Storage efficiency

Insert 1M records

16byte keysRandom values of

[0, 1000]bytes length

16byte keysRandom values of

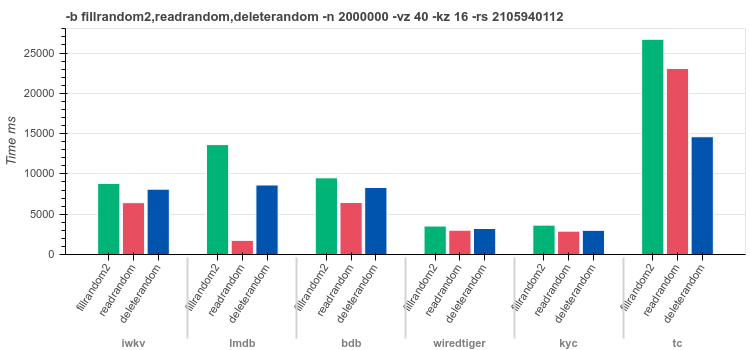

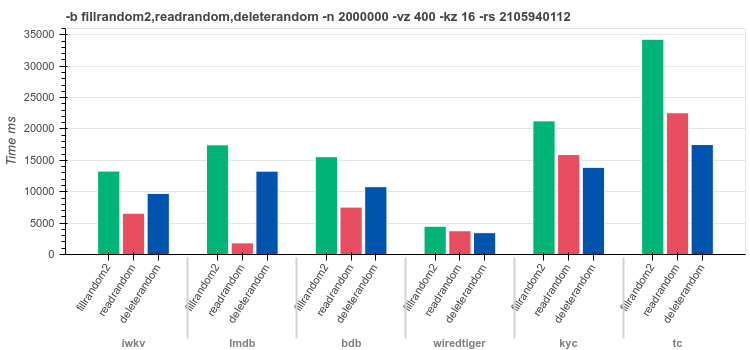

[0..40]bytes lengthfilrandom2 - insert

2Mrandom distributed recordsreadrandom - read

2Mrecords in random orderdeleterandom - delete

2Mrandom keys

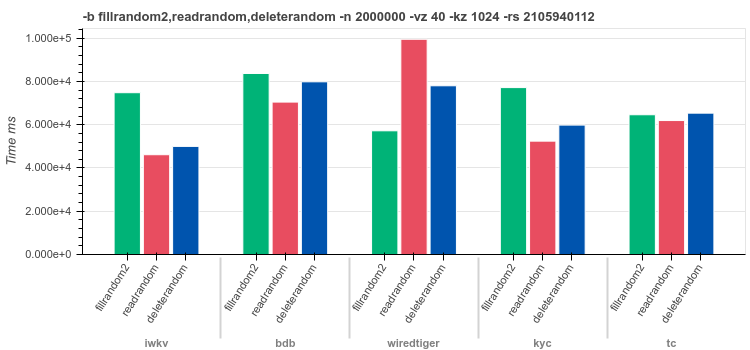

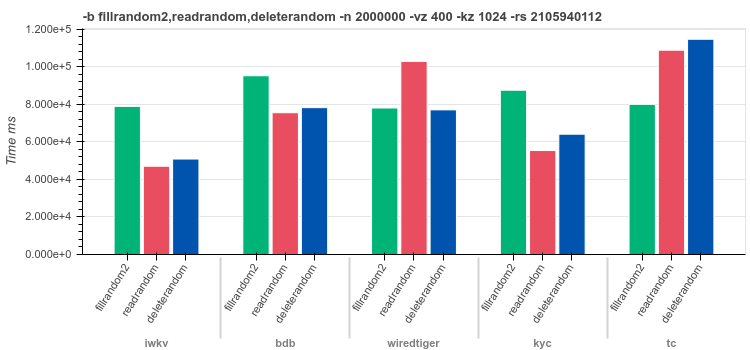

1024byte keysRandom values of

[0..40]bytes lengthfilrandom2 - insert

2Mrandom distributed recordsreadrandom - read

2Mrecords in random orderdeleterandom - delete

2Mrandom keys

LMDB is out of scope since keys greater than 511 bytes are not supported by default

16byte keysRandom values of

[0..400]bytes lengthfilrandom2 - insert

2Mrandom distributed recordsreadrandom - read

2Mrecords in random orderdeleterandom - delete

2Mrandom keys

1024byte keysRandom values of

[0..400]bytes lengthfilrandom2 - insert

2Mrandom distributed recordsreadrandom - read

2Mrecords in random orderdeleterandom - delete

2Mrandom keys

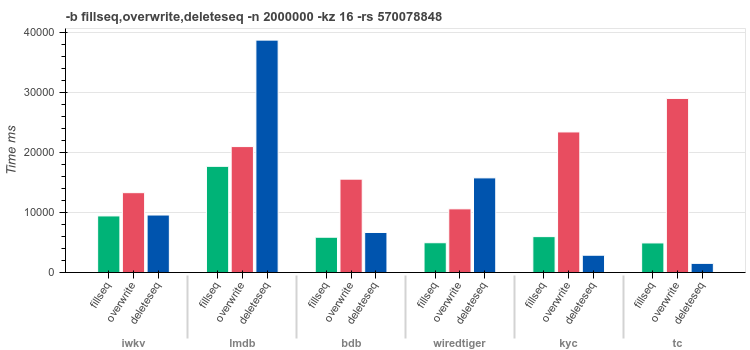

16byte keys400byte valuesfilseq - insert

2Mrecords in ascending orderoverwrite - overwrite

2Mvalues for random keysdeleterandom - delete

2Mrandom keys

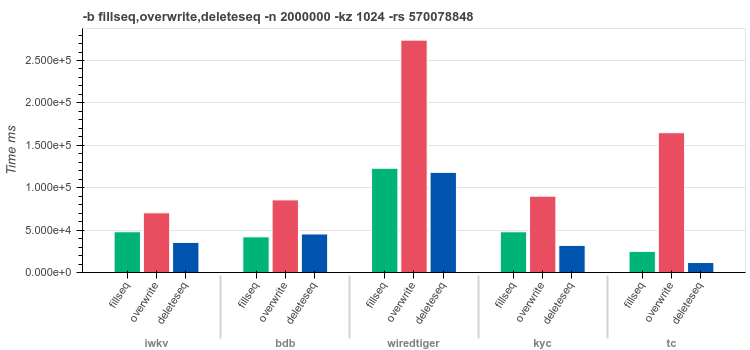

1024byte keys400byte valuesfilseq - insert

2Mrecords in ascending orderoverwrite - overwrite

2Mvalues for random keysdeleterandom - delete

2Mrandom keys

16byte keysRandom values of

[0..400]bytes lengthfilrandom2 - insert

10Mrandom distributed recordsreadrandom - read

10Mrecords in random orderreadseq - read

10Mrecords sequentiallyreadreverse - read

10Mrecords sequentially in reverse order

16byte keys200Kbvaluesfilrandom2 - insert

10Krandom distributed records

IOArena benchmarks

IOArena is an utility designed for evaluating performance of embedded databases. This tool able to run multi-threaded benchmarks and measure not only total time of test performed but throughput and latency.

git clone https://github.com/pmwkaa/ioarena

cd ioarena && mkdir build && cd build

cmake .. -DENABLE_IOWOW=ON -DENABLE_WIREDTIGER=ON -DENABLE_ROCKSDB=ON \

-DENABLE_LMDB=ON -DENABLE_LEVELDB=ON -DENABLE_UNQLITE=ON \

-DENABLE_SOPHIA=ON -DENABLE_SQLITE3=ON

make

src/ioarena -hIOArena benchmarks

IOWOW Internals

IOWOW weak points

Limited scalability in multithreaded environment: RW locks used, single file access

Maximum database size is limited to

512GbIOWOW is not intended for big-data

Isolated transactions are not supported

IOWOW strong points

Tiny library size ~

220KSupport of online backups

Support of multiple sub-databases in one file

Competitive performance

Room for improvements

Use data compression - skiplist nature allows simple ways to do that

Work on performance of data update operations

Create more effective caching of data

Research ways of concurrent data modification